What Is a Robots.txt File and How to Implement It on Your Website

Robots.txt File in SEO

The robots.txt file is a text file that webmasters create to instruct web robots (typically search engine robots) how to crawl pages on their website. The file is part of the Robots Exclusion Protocol (REP) and is used to ask search engines not to crawl all or part of a website that is otherwise publicly viewable.

Here is an example of a robots.txt file that disallows all robots from crawling any part of the website:

```text
User-agent: *
Disallow: /
```
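To check how a parser reads this rule, Python's standard-library `urllib.robotparser` module can parse the file text directly. The sketch below assumes the example domain `https://www.abc.com` used later in this article, and `is_allowed` is a hypothetical helper name. It also shows why the two directives belong on consecutive lines: this parser follows the original convention that a blank line ends a record, so a blank line right after `User-agent` makes it drop the `Disallow` rule. Newer parsers may tolerate the gap, but consecutive lines are the safe choice.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Parse robots.txt text and report whether user_agent may crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


block_all = "User-agent: *\nDisallow: /\n"
# Same directives separated by a blank line: this parser treats the blank
# line as the end of the record, so the Disallow rule is ignored.
block_all_with_gap = "User-agent: *\n\nDisallow: /\n"

print(is_allowed(block_all, "Googlebot", "https://www.abc.com/"))
print(is_allowed(block_all_with_gap, "Googlebot", "https://www.abc.com/"))
```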

Here is an example that allows all robots to crawl all files on the website:

```text
User-agent: *
Disallow:
```
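The only difference from the previous example is the "/" after Disallow: an empty value blocks nothing, while "/" matches every path. A minimal comparison with Python's `urllib.robotparser`, assuming hypothetical page URLs on `www.abc.com` and a hypothetical `is_allowed` helper:

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Parse robots.txt text and report whether user_agent may crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


allow_all = "User-agent: *\nDisallow:\n"    # empty value: nothing is blocked
block_all = "User-agent: *\nDisallow: /\n"  # "/" is a prefix of every path

for url in ("https://www.abc.com/", "https://www.abc.com/blog/post.html"):
    print(url, is_allowed(allow_all, "Bingbot", url), is_allowed(block_all, "Bingbot", url))
```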

It’s important to note that the robots.txt file is not a 100% reliable means of blocking access to your site. Following it is voluntary, so some robots may ignore the instructions in the file. Also, a disallowed page can still appear in search results: if other websites link to it, a search engine can index the URL without crawling its content.
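The check happens inside the crawler's own code: a well-behaved bot reads robots.txt and tests each URL before requesting it, and nothing on the server stops a bot that skips this step. A minimal sketch of that check with Python's `urllib.robotparser`, assuming a hypothetical `urls_to_crawl` helper, a hypothetical bot name `ExampleBot`, and example URLs on `www.abc.com`:

```python
from urllib.robotparser import RobotFileParser


def urls_to_crawl(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Keep only the URLs a robots.txt-compliant crawler would request.

    The website does not enforce this: a crawler that never runs the
    check can still request every URL in the list.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


rules = "User-agent: *\nDisallow: /example-subfolder/\n"
candidates = [
    "https://www.abc.com/",
    "https://www.abc.com/example-subfolder/report.html",
    "https://www.abc.com/contact.html",
]
print(urls_to_crawl(rules, "ExampleBot", candidates))
```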

In terms of SEO, the robots.txt file can be useful if you want to stop search engines from crawling pages on your site that you don’t want indexed. However, to keep a page out of search results it’s generally better to use the noindex tag or a password-protected area of your site. Keep in mind that a noindex tag only works if the page is not blocked in robots.txt, because the crawler has to fetch the page to see the tag.
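A crawler can only read a noindex tag on a page it is allowed to fetch, so a page that is both disallowed and tagged noindex never has its tag seen. The audit sketch below flags such pages with Python's `urllib.robotparser`; it assumes you keep your own list of noindex URLs, and both that list and the `hidden_noindex_pages` name are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def hidden_noindex_pages(robots_txt: str, noindex_urls: list[str],
                         user_agent: str = "Googlebot") -> list[str]:
    """Return noindex pages that robots.txt blocks, so the tag is never read."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindex_urls if not parser.can_fetch(user_agent, url)]


rules = "User-agent: *\nDisallow: /example-subfolder/\n"
noindex_urls = [
    "https://www.abc.com/thank-you.html",
    "https://www.abc.com/example-subfolder/old-offer.html",
]
print(hidden_noindex_pages(rules, noindex_urls))
```

Any URL this reports should either be removed from the Disallow rules or protected another way.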

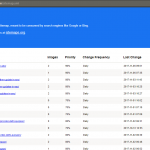

Robots.txt is a text file used in SEO to tell search engine crawlers (spiders such as Googlebot) which pages or folders of a website they should not crawl. It is a set of instructions for search engine bots, and Google's bots follow them. You can block the whole website, a particular folder, or a single landing page. The file must always be uploaded to the root folder of the site, so it is available at an address like https://www.abc.com/robots.txt. You can check the file in Google Search Console (formerly Google Webmaster Tools).
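Because crawlers look for the file only at the root of a host, the robots.txt address for any page can be derived from the page URL alone. A small sketch using Python's `urllib.parse`; the `robots_txt_url` name and the `shop.abc.com` subdomain are hypothetical:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address that governs page_url.

    Only the scheme and host (with any port) are kept; the path, query
    string and fragment are replaced, because robots.txt lives at the
    root. Each host, including each subdomain, needs its own file.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.abc.com/blog/seo-tips.html?ref=home"))
print(robots_txt_url("https://shop.abc.com/cart"))
```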

Robots.txt syntax

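User-agent: Names the crawler that the rules which follow apply to (for example Googlebot, msnbot, or Bingbot). An asterisk (*) matches all crawlers.

Disallow: Tells the crawler not to crawl the given path. An empty value blocks nothing.

Allow: Permits crawling of a path, typically to make an exception for a page inside a disallowed folder.

Crawl-delay: Asks crawlers to wait the given number of seconds between requests. Not every crawler supports it; Googlebot, for example, ignores it.

Sitemap: Gives the full URL of your XML sitemap so that bots can easily find it and crawl all of your updated pages.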
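To see how these directives work together, here is a hypothetical file (the paths and sitemap URL are assumptions) read with Python's `urllib.robotparser`; `site_maps()` needs Python 3.8 or later. Python's parser applies the first rule that matches, whereas Google documents that it uses the most specific (longest) matching rule, so the Allow exception is listed first to get the same answer under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt using every directive described above.
ROBOTS_TXT = """\
User-agent: *
Allow: /example-subfolder/public-page.html
Disallow: /example-subfolder/
Crawl-delay: 10
Sitemap: https://www.abc.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Allow comes first because this parser stops at the first matching rule.
print(parser.can_fetch("Bingbot", "https://www.abc.com/example-subfolder/public-page.html"))
print(parser.can_fetch("Bingbot", "https://www.abc.com/example-subfolder/other.html"))
print(parser.crawl_delay("Bingbot"))  # seconds, as an int
print(parser.site_maps())             # list of Sitemap URLs
```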

Block the whole website:

```text
User-agent: *
Disallow: /
```

Allow all crawlers to access the whole website:

```text
User-agent: *
Disallow:
```

Block a specific folder:

```text
User-agent: *
Disallow: /example-subfolder/
```
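Disallow values are matched as prefixes of the URL path, so the trailing slash matters: the rule covers everything inside the folder but not the bare folder URL without a slash. A quick check with Python's `urllib.robotparser`, using hypothetical page names:

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse("User-agent: *\nDisallow: /example-subfolder/\n".splitlines())

for path in ("/example-subfolder/page.html",  # starts with the rule: blocked
             "/example-subfolder",            # no trailing slash: not matched
             "/another-folder/page.html"):    # different folder: allowed
    print(path, parser.can_fetch("*", "https://www.abc.com" + path))
```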

Block a specific page:

```text
User-agent: *
Disallow: /example-subfolder/blocked-page.html
```
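A page rule blocks only that page; other pages in the same folder stay crawlable. A minimal check with Python's `urllib.robotparser`, where `other-page.html` is a hypothetical sibling page:

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse("User-agent: *\nDisallow: /example-subfolder/blocked-page.html\n".splitlines())

blocked = parser.can_fetch("*", "https://www.abc.com/example-subfolder/blocked-page.html")
sibling = parser.can_fetch("*", "https://www.abc.com/example-subfolder/other-page.html")
print(blocked, sibling)  # False True
```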

Block a specific crawler (here Googlebot; other crawlers are not affected):

```text
User-agent: Googlebot
Disallow: /abc-subfolder/
Disallow: /abc-subfolder/index-page.html
```
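A group that names one crawler applies only to that crawler. Other bots fall back to a "User-agent: *" group, and since this file has none, they may crawl everything. The check below uses Python's `urllib.robotparser`, with Bingbot as an assumed second crawler. The page rule is already covered by the folder rule, because /abc-subfolder/index-page.html starts with /abc-subfolder/.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /abc-subfolder/
Disallow: /abc-subfolder/index-page.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://www.abc.com/abc-subfolder/index-page.html"
for bot in ("Googlebot", "Bingbot"):
    # Only the Googlebot group exists, so Bingbot has no rules to follow.
    print(bot, parser.can_fetch(bot, url))
```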

Atul is a digital marketing expert and blogger with over 6 years of experience helping businesses grow their online presence. Specializing in SEO, content marketing, and data-driven strategies, Atul also shares insights through blogging, offering valuable tips and guidance on the latest trends in the digital marketing landscape.